Role of Conversational AI in Digital Transformation

Conversational AI powered by Retrieval-Augmented Generation (RAG) is accelerating digital transformation across industries by enabling chatbots and virtual assistants to deliver accurate, context-aware, and up-to-date responses.

Ankit Patel

5/11/2025, 3 min read

Modern, context-aware conversational AI chatbots are now within reach thanks to advances in open-source frameworks and efficient small language models. By dynamically retrieving relevant information from internal and external knowledge bases, RAG-enhanced assistants personalize customer interactions, support real-time decision-making, and streamline operations in areas like sales, education, healthcare, and employee assistance.

Grounding answers in retrieved data reduces misinformation, increases user trust, and lets organizations put their proprietary data to work in more targeted, efficient, and impactful digital experiences. This blog explores how to build a capable virtual assistant or conversational AI chatbot using the LangChain framework, the Ollama inference engine, Retrieval-Augmented Generation (RAG) over a knowledge base and chat history, a configurable vector store (Milvus, MongoDB, or PGVector), and a Vue/ReactJS-based UI. We'll also discuss the benefits of pre-trained micro/small LLMs (Llama 3, Gemma 3, and Qwen 3 variants with roughly 1B–7B parameters), covering the architecture and its limitations and summarizing deployment on edge devices.

Architecture Overview:

The chatbot system combines several cutting-edge technologies:

LangChain: Orchestrates LLMs, retrieval, and memory for context-aware conversations.

Ollama: Provides fast, low-cost local LLM inference for models such as Llama 3, Gemma 3, and Qwen 3.

RAG Chain: Enhances accuracy by grounding responses in external knowledge bases and chat history.

Vector Store (Milvus/MongoDB/PGVector): Stores and retrieves high-dimensional embeddings for semantic search and context retrieval.

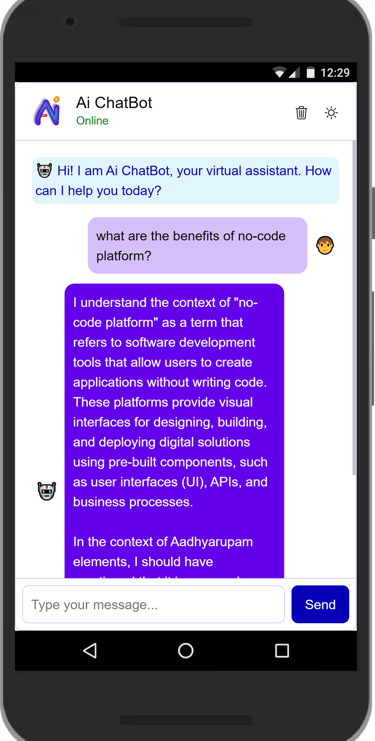

Vue/ReactJS UI: Delivers a responsive, interactive chat interface for end users.

Key Components and Their Roles:

LangChain Framework

Manages the conversational flow, memory, and prompt engineering.

Integrates seamlessly with Ollama for local inference and supports RAG pipelines for context-aware responses.
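To make the memory and prompt-engineering role concrete, here is a minimal, framework-free sketch of how a chain might assemble one chat request from a system instruction, retrieved context, prior turns, and the new question. It does not use LangChain's API: `build_messages` is a hypothetical helper, and the role/content message dictionaries are an assumption that mirrors the format most chat-style model APIs accept. The sample texts are made up.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_messages(system: str, context_docs: List[str],
                   history: List[Message], question: str) -> List[Message]:
    """Assemble a chat request: system prompt plus context, prior turns, new question.

    Hypothetical helper for illustration; not a LangChain API.
    """
    # Number each retrieved passage so the model (and the user) can refer to it.
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(context_docs, start=1))
    system_msg = {
        "role": "system",
        "content": f"{system}\n\nAnswer using only this context:\n{context}",
    }
    # Prior turns (the conversation memory) sit between the system message
    # and the newest user question.
    return [system_msg, *history, {"role": "user", "content": question}]


if __name__ == "__main__":
    msgs = build_messages(
        system="You are a helpful support assistant.",
        context_docs=["A refund is processed within 14 days of the return request."],
        history=[{"role": "user", "content": "Hi"},
                 {"role": "assistant", "content": "Hello! How can I help?"}],
        question="How long do refunds take?",
    )
    for m in msgs:
        print(m["role"], "->", m["content"][:40])
```

Keeping context in the system message and history as separate turns is one common layout; frameworks such as LangChain let you template the same pieces declaratively.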

Ollama Inference Engine

Runs LLMs locally, enabling fast, private, and cost-effective inference.

Supports a range of models, including small, quantized variants of Llama 3, Gemma 3, and Qwen 3 that suit edge devices.
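Ollama serves models over a local HTTP API (port 11434 by default). The sketch below uses only the Python standard library to call its `/api/chat` endpoint with streaming turned off. The helper names `make_chat_payload` and `chat` are hypothetical, and in a LangChain app the framework's Ollama integration would normally make this call for you.

```python
import json
import urllib.request
from typing import Dict, List

# Ollama's default local chat endpoint.
OLLAMA_URL = "http://localhost:11434/api/chat"


def make_chat_payload(model: str, messages: List[Dict[str, str]]) -> bytes:
    """Encode a non-streaming /api/chat request body as JSON bytes."""
    body = {"model": model, "messages": messages, "stream": False}
    return json.dumps(body).encode("utf-8")


def chat(model: str, messages: List[Dict[str, str]],
         url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the request to a running Ollama server and return the reply text."""
    req = urllib.request.Request(
        url,
        data=make_chat_payload(model, messages),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.loads(resp.read().decode("utf-8"))
    # With stream=False the whole answer arrives as message.content.
    return reply["message"]["content"]
```

Usage, assuming Ollama is running locally and the example model tag has been pulled first (`ollama pull llama3.2:3b`): `chat("llama3.2:3b", [{"role": "user", "content": "Summarize RAG in one sentence."}])`. Swapping in a Gemma 3 or Qwen 3 tag only changes the `model` string.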

Retrieval Augmented Generation (RAG)

Combines LLM generation with retrieval from a knowledge base and chat history.

Helps keep responses accurate, up-to-date, and contextually relevant.
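The retrieval half of RAG can be illustrated without any external services. This toy sketch stands in for a real embedding model with bag-of-words term counts (an assumption made purely so the example is self-contained), ranks knowledge-base passages by cosine similarity, and folds the previous user turn into the query so that a follow-up such as "How long does it take?" still finds the right passage. `embed`, `cosine`, and `retrieve` are hypothetical names; a production pipeline would use a neural embedding model and a vector store instead.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, history: List[Dict[str, str]],
             knowledge_base: List[str], k: int = 2) -> List[str]:
    """Return the top-k passages, making the query history-aware by
    prepending the most recent earlier user turn."""
    previous = [m["content"] for m in history if m["role"] == "user"]
    query = embed(" ".join(previous[-1:] + [question]))
    ranked = sorted(knowledge_base, key=lambda doc: cosine(query, embed(doc)), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    kb = [
        "Our office is open Monday to Friday.",
        "Shipping is free on orders over 50 dollars.",
        "A refund is processed within 14 days of the return request.",
    ]
    history = [{"role": "user", "content": "What is your refund policy?"},
               {"role": "assistant", "content": "Refunds are available for returns."}]
    print(retrieve("How long does it take?", history, kb, k=1))
```

Without the history, the follow-up question shares no words with any passage, which is why combining chat history with the knowledge base matters for multi-turn conversations.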

Configurable Vector Store:

Milvus: Open-source, scalable, and optimized for vector search and AI workloads. Runs efficiently on laptops and clusters.

MongoDB: Flexible document database with vector search as an add-on, suitable for hybrid structured/unstructured data.

PGVector: An open-source PostgreSQL extension that adds vector storage and similarity search, so embeddings can live alongside relational data in an existing Postgres database.
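One way to keep the store configurable is to code the RAG chain against a small interface and choose the backend from configuration. In this sketch, `VectorStore`, `InMemoryVectorStore`, and `make_vector_store` are hypothetical names. Only an in-memory, brute-force backend is implemented; the Milvus, MongoDB, and PGVector branches are placeholders where an adapter around each database's client library would go. LangChain also provides its own vector-store abstraction with integrations for these databases, which would normally fill this role.

```python
import math
from typing import Dict, List, Protocol, Sequence, Tuple


class VectorStore(Protocol):
    """Minimal interface the RAG chain depends on (hypothetical)."""

    def add(self, doc_id: str, vector: Sequence[float], text: str) -> None: ...

    def search(self, query: Sequence[float], k: int) -> List[Tuple[str, float]]: ...


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class InMemoryVectorStore:
    """Brute-force cosine search over all rows; fine for demos, not for large collections."""

    def __init__(self) -> None:
        self._rows: Dict[str, Tuple[List[float], str]] = {}

    def add(self, doc_id: str, vector: Sequence[float], text: str) -> None:
        self._rows[doc_id] = (list(vector), text)

    def search(self, query: Sequence[float], k: int) -> List[Tuple[str, float]]:
        scored = [(doc_id, _cosine(query, vec)) for doc_id, (vec, _) in self._rows.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


def make_vector_store(backend: str) -> VectorStore:
    """Pick a backend by name, e.g. read from a config file or environment variable."""
    if backend == "memory":
        return InMemoryVectorStore()
    if backend in {"milvus", "mongodb", "pgvector"}:
        # Placeholder: wrap the real database client behind the same add/search interface.
        raise NotImplementedError(f"{backend} adapter is not included in this sketch")
    raise ValueError(f"unknown vector store backend: {backend!r}")
```

Because the chain only calls `add` and `search`, switching from a laptop setup to a clustered database becomes a configuration change rather than a code change.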

Vue/ReactJS ChatBot UI

Lightweight, versatile, and easy to integrate.

Supports real-time chat, conversation history, and responsive design.

Benefits of Pre-trained Micro/Small LLMs

Resource Efficiency: Micro/small models (such as Llama 3, Gemma 3, or Qwen 3 with 1B–7B parameters) require less memory and compute, making them well suited to edge deployment on laptops, mobile phones, and other low-memory devices.

Fast Inference: Local execution via Ollama keeps latency low and keeps data on the device, which improves privacy.

Competitive Accuracy: While not as powerful as large models, micro/small LLMs deliver strong performance for most conversational and RAG tasks.

Open Models: Llama 3, Gemma 3, and Qwen 3 publish their weights, so they can be customized and fine-tuned, subject to each model's license.
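A back-of-the-envelope calculation shows why 1B–7B models fit on edge hardware: weight memory is roughly parameter count times bytes per parameter. The hypothetical helper below covers weights only; the KV cache, activations, and runtime overhead come on top, so treat the numbers as lower bounds. The 4-bit case assumes a quantized build, which is common for local runtimes such as Ollama but depends on the model tag you choose.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for name, params in [("1B", 1e9), ("3B", 3e9), ("7B", 7e9)]:
        fp16 = weight_memory_gb(params, 16)
        q4 = weight_memory_gb(params, 4)
        print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

For example, a 7B model needs about 14 GB for 16-bit weights but about 3.5 GB at 4 bits, which is the difference between needing a workstation GPU and fitting on a typical laptop.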

Limitations

Model Size vs. Capability: Micro/small LLMs may struggle with highly complex queries or nuanced reasoning compared to larger models.

Knowledge Freshness: LLMs require frequent updates or robust RAG pipelines to stay current.

Vector Store Overhead: Managing and scaling vector databases (especially on low-resource devices) can introduce complexity.

UI Complexity: Advanced features (e.g., multi-turn memory, real-time streaming) require careful frontend engineering.
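Multi-turn memory has to fit inside the model's context window, which is limited for many compact models. A common tactic is to keep only the most recent turns that fit a token budget. In this sketch, `trim_history` and `approx_tokens` are hypothetical helpers, and tokens are approximated by whitespace-separated words; a real implementation would count tokens with the model's own tokenizer.

```python
from typing import Dict, List


def approx_tokens(text: str) -> int:
    """Crude token estimate: number of whitespace-separated words."""
    return len(text.split())


def trim_history(history: List[Dict[str, str]], budget: int) -> List[Dict[str, str]]:
    """Keep the newest messages whose combined estimated size fits within budget."""
    kept: List[Dict[str, str]] = []
    used = 0
    for msg in reversed(history):  # walk from newest to oldest
        cost = approx_tokens(msg["content"])
        if used + cost > budget:
            break  # stop at the first message that would overflow the budget
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order for the prompt
    return kept
```

Dropping the oldest turns first preserves the context the user is most likely referring to; summarizing older turns instead of discarding them is a common refinement.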

Deployment, Scalability and Performance

Edge Deployment

The conversational AI stack is lightweight enough to run on laptops and some mobile devices.

Milvus offers an embedded mode (Milvus Lite), and MongoDB can run locally or in the cloud.

Performance

Inference latency is low with small models and local execution.

Vector search (Milvus) is optimized for fast retrieval, even at scale.

Accuracy and Scale

RAG chains with knowledge base and chat history boost factual accuracy and contextual relevance.

The system scales from single-device deployments to distributed clusters.

Conclusion

By combining LangChain, Ollama, RAG, a flexible vector store, and a Vue/ReactJS UI, you can build a robust, context-aware chatbot that runs efficiently on edge devices. Leveraging pre-trained micro/small LLMs such as Llama 3, Gemma 3, or Qwen 3 delivers fast, private, and cost-effective conversational AI, with the flexibility to scale as your needs grow.

Ready to build your own conversational AI chatbot? Reach out to Aadhyarupam innovators and unlock the power of local, context-rich AI for your users!

Aadhyarupam innovators

One stop software solution provider

contact@aadhyarupam.com

© 2020-2025 Aadhyarupam. All rights reserved.